Module 1

Multimodal University

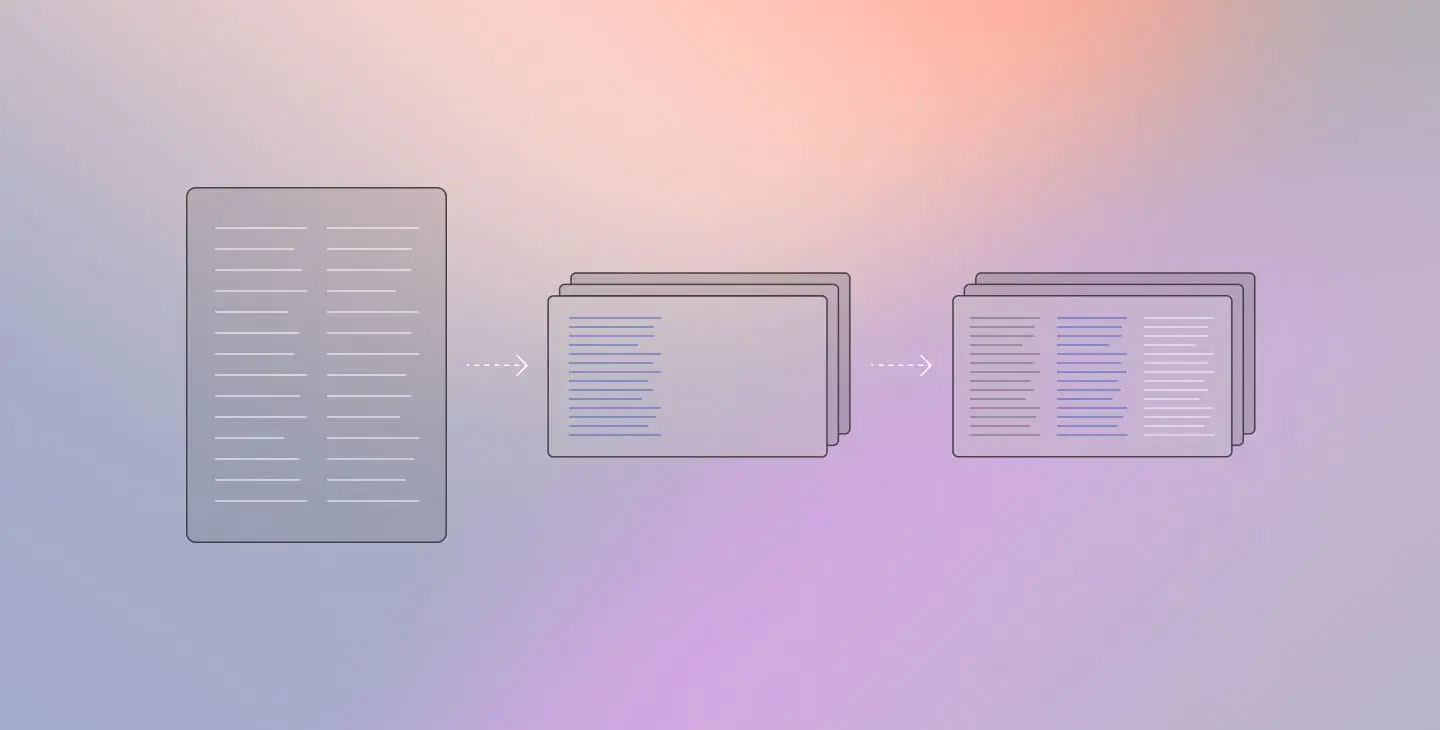

Welcome to Multimodal University, your premier learning destination for mastering multimodal AI development. Designed for developers and technical professionals, our curriculum provides comprehensive resources and hands-on guidance for building systems that understand text, images, audio, and video.

Join us to master the technologies powering the next generation of AI applications and start building sophisticated multimodal systems today.